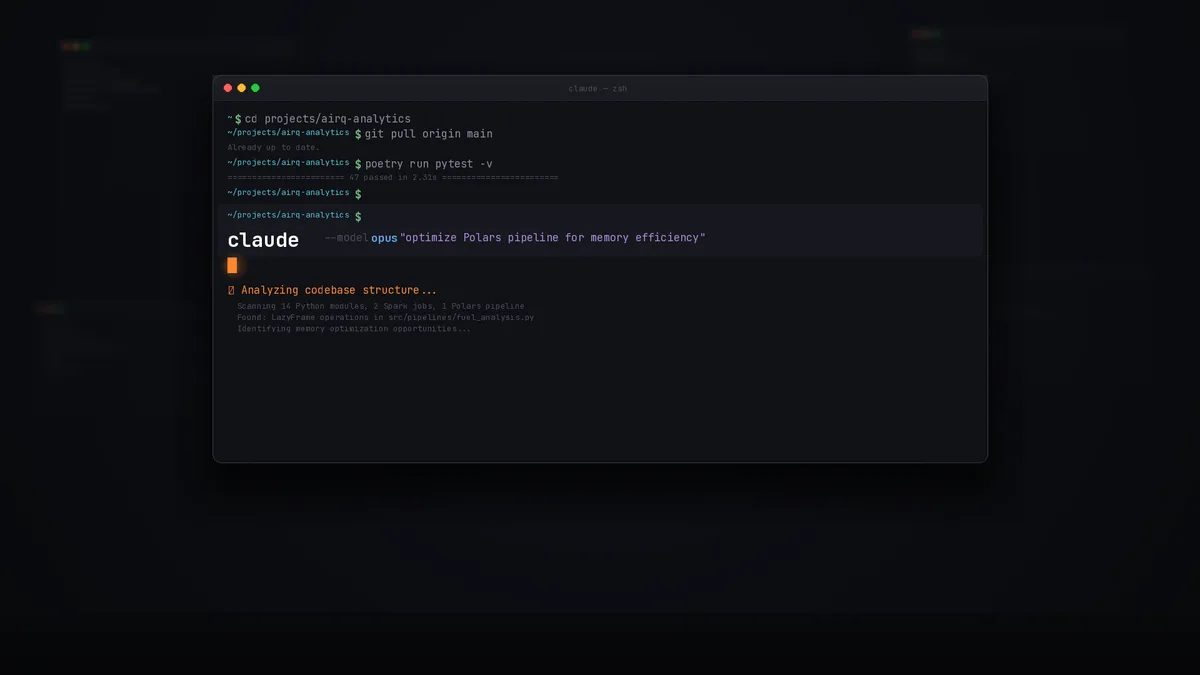

Use a UserPromptSubmit hook to force Claude into evaluating and activating skills before every response.

Claude Code skills are supposed to activate autonomously based on their descriptions. In practice? They often stay dormant, quietly ignored while Claude proceeds with its own approach.

Scott Spence figured this out and shared a brilliant solution in his post "How to Make Claude Code Skills Activate Reliably". All credit goes to him for the core idea.

The short version: his "forced eval hook" achieved an 84% success rate in activating skills, compared to just 20% with simple instructions. That's a game changer.

Why It Works

The hook injects a mandatory pre-response sequence that forces Claude to:

- Evaluate each available skill explicitly (YES/NO with reasoning)

- Activate relevant skills using the Skill() tool immediately

- Implement only after activation is complete

The key insight is the commitment mechanism. Simple instructions become background noise. Forced evaluation creates accountability through explicit reasoning.
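To make the mechanism concrete, here is a minimal sketch of what such a hook boils down to: a script that prints instructions to stdout. It assumes, as I understand the Claude Code hooks documentation, that a UserPromptSubmit hook exiting with status 0 has its stdout added to Claude's context for that prompt. The function name `emit_reminder` and the shortened instruction text are illustrative only, not the real hook.

```shell
#!/bin/bash
# Minimal sketch of a UserPromptSubmit hook (illustrative, not the full hook).
# Assumption: on exit status 0, this script's stdout is added to Claude's context.

emit_reminder() {
  # The quoted delimiter ('EOF') keeps the body literal:
  # no $VAR, $(...) or backtick expansion happens inside it.
  cat <<'EOF'
INSTRUCTION: Before responding, list each available skill as YES/NO with a reason.
Literal text stays literal: $HOME is not expanded here.
EOF
}

emit_reminder
```

Quoting the heredoc delimiter is a small design choice that matters: the instruction text reaches Claude exactly as written, even if it later contains characters the shell would otherwise interpret.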

My Modification

I extended Scott's hook to also enforce CLAUDE.md compliance. My global instructions contain important environment setup (like sourcing .envrc before cloud commands), and Claude was occasionally ignoring these too.

The updated hook now has two parts:

- Part A: CLAUDE.md compliance check

- Part B: Skill activation (Scott's original approach)

The Hook

Create .claude/hooks/skill-forced-eval-hook.sh:

#!/bin/bash

# UserPromptSubmit hook that forces explicit skill evaluation and CLAUDE.md adherence

cat <<'EOF'

INSTRUCTION: MANDATORY PRE-RESPONSE SEQUENCE

=== PART A: CLAUDE.MD COMPLIANCE ===

Step A1 - RECALL (do this in your response):

List each instruction from ~/.claude/CLAUDE.md that applies to this task:

- [instruction] - APPLIES/NOT APPLICABLE - [why]

Step A2 - COMMIT (state your commitment):

IF any instructions APPLY → State "Will follow: [list instructions]"

IF none apply → State "No CLAUDE.md instructions apply"

Step A3 - ENFORCE:

You MUST follow committed instructions throughout your response.

Example:

- Source .envrc before cloud commands - APPLIES - using gcloud

- No Co-Authored-By for _databy repos - NOT APPLICABLE - not in _databy

Will follow: Source .envrc before cloud commands

=== PART B: SKILL ACTIVATION ===

Step B1 - EVALUATE (do this in your response):

For each skill in <available_skills>, state: [skill-name] - YES/NO - [reason]

Step B2 - ACTIVATE (do this immediately after Step B1):

IF any skills are YES → Use Skill(skill-name) tool for EACH relevant skill NOW

IF no skills are YES → State "No skills needed" and proceed

Step B3 - IMPLEMENT:

Only after Step B2 is complete, proceed with implementation.

CRITICAL: You MUST call Skill() tool in Step B2. Do NOT skip to implementation.

The evaluation (Step B1) is WORTHLESS unless you ACTIVATE (Step B2) the skills.

Example of correct sequence:

- research: NO - not a research task

- svelte5-runes: YES - need reactive state

- sveltekit-structure: YES - creating routes

[Then IMMEDIATELY use Skill() tool:]

> Skill(svelte5-runes)

> Skill(sveltekit-structure)

[THEN and ONLY THEN start implementation]

EOF

Make it executable:

chmod +x .claude/hooks/skill-forced-eval-hook.sh

Configuration
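Before registering the hook, it can help to run the script by hand and confirm it is executable and prints both parts. The following is a rough smoke test, not part of Scott's approach or mine: `check_hook` and the `HOOK_PATH` variable are hypothetical names I made up for this sketch, and the markers it looks for are the two section banners from the heredoc above.

```shell
#!/bin/bash
# Hypothetical smoke test for the hook script; check_hook is an illustrative helper.

check_hook() {
  local hook="$1" output marker
  if [ ! -x "$hook" ]; then
    echo "FAIL: $hook is missing or not executable" >&2
    return 1
  fi
  # Run the script directly with empty stdin and capture what it prints.
  output="$("$hook" </dev/null)" || { echo "FAIL: $hook exited non-zero" >&2; return 1; }
  # Both section banners from the heredoc should appear in the output.
  for marker in "PART A: CLAUDE.MD COMPLIANCE" "PART B: SKILL ACTIVATION"; do
    if ! printf '%s\n' "$output" | grep -qF "$marker"; then
      echo "FAIL: missing marker: $marker" >&2
      return 1
    fi
  done
  echo "OK: $hook prints both parts"
}

# Defaults to the path used in this post; set HOOK_PATH to check another file.
check_hook "${HOOK_PATH:-.claude/hooks/skill-forced-eval-hook.sh}" \
  || echo "Fix the script before adding it to settings.json" >&2
```

If this prints a FAIL line, the usual culprits are a missing chmod +x or a heredoc that never reaches its closing EOF line.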

Add the hook to your .claude/settings.json:

{
  "hooks": {
    "UserPromptSubmit": [
      {
        "hooks": [
          {
            "type": "command",
            "command": ".claude/hooks/skill-forced-eval-hook.sh"
          }
        ]
      }
    ]
  }
}

Results

This has been a game changer in my workflow. Claude now consistently:

- Checks and follows my global instructions from CLAUDE.md

- Evaluates available skills before diving into implementation

- Actually uses the skills I've carefully crafted

The aggressive language in the hook ("MANDATORY," "WORTHLESS," "CRITICAL") isn't accidental. It prevents Claude from treating the evaluation as optional and skipping straight to implementation.

Thanks again to Scott Spence for sharing this approach. Sometimes the best solutions are the ones that force explicit commitment rather than hoping for implicit understanding.